Serving tech enthusiasts for over 25 years.

TechSpot means tech analysis and advice you can trust.

While most people think of Cisco as a company that links infrastructure elements in data centers and the cloud, it is not the first company that comes to mind when discussing GenAI. However, at its recent Partner Summit event, the company made several announcements aimed at changing that perception.

Specifically, Cisco debuted several new servers equipped with Nvidia GPUs and AMD CPUs and targeted at AI workloads, a new high-speed network switch optimized for interconnecting multiple AI-focused servers, and several preconfigured PODs of compute and network infrastructure designed for specific applications.

On the server side, Cisco's new UCS C885A M8 Server packages up to eight Nvidia H100 or H200 GPUs and AMD Epyc CPUs into a compact rack server capable of everything from model training to fine-tuning. Configured with both Nvidia Ethernet cards and DPUs, the system can function independently or be networked with other servers into a more powerful system.

The new Nexus 9364E-SG2 switch, based on Cisco's latest G200 custom silicon, offers 800G speeds and large memory buffers to enable high-speed, low-latency connections across multiple servers.

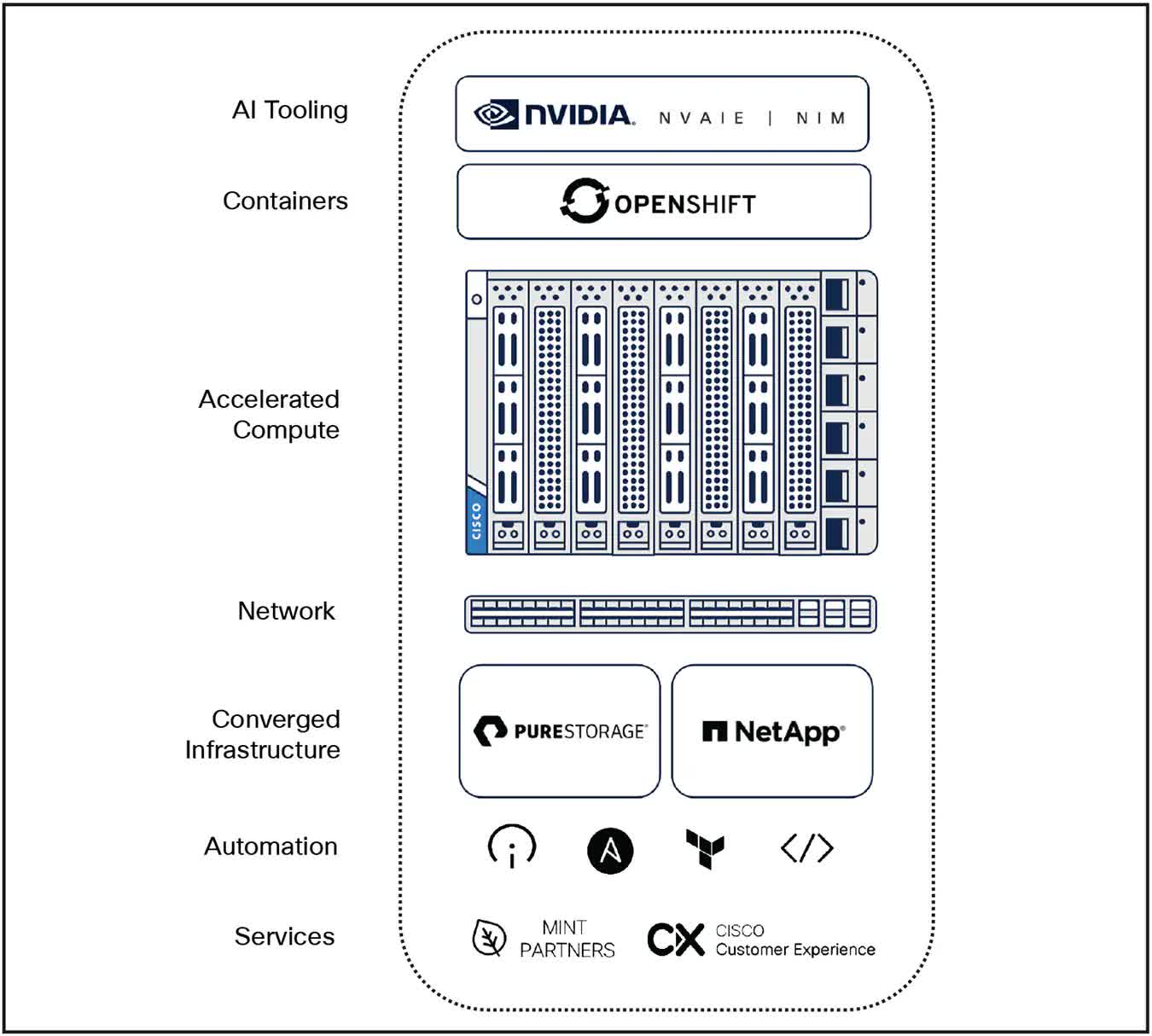

The most interesting new additions come in the form of AI PODs, which are Cisco Validated Designs (CVDs) that combine CPU and GPU compute, storage, and networking along with Nvidia's AI Enterprise platform software. Essentially, they are completely preconfigured infrastructure systems that provide an easier, plug-and-play solution for organizations to launch their AI deployments, something many companies beginning their GenAI efforts need.

Cisco is offering a range of different AI PODs tailored for various industries and applications, helping organizations eliminate some of the guesswork in selecting the infrastructure they need for their specific requirements. Additionally, because they come with Nvidia's software stack, there are several industry-specific applications and software building blocks (e.g., NIMs) that organizations can build from. Initially, the PODs are geared more towards AI inferencing than training, but Cisco plans to offer more powerful PODs capable of AI model training over time.

Another key aspect of the new Cisco offerings is a link to its Intersight management and automation platform, providing companies with better device management capabilities and easier integration into their existing infrastructure environments.

The net result is a new set of tools for Cisco and its sales partners to offer to their long-established enterprise customer base.

Realistically, Cisco's new server and compute offerings are unlikely to appeal to large cloud customers who were early purchasers of this type of infrastructure. (Cisco's switches and routers, on the other hand, are key components for hyperscalers.) However, it's becoming increasingly clear that enterprises are interested in building their own AI-capable infrastructure as their GenAI journeys progress. While many AI application workloads will likely continue to exist in the cloud, companies are realizing the need to perform some of this work on-premises.

In particular, because effective AI applications need to be trained or fine-tuned on a company's most valuable (and likely most sensitive) data, many organizations are hesitant to have that data and models based on it in the cloud.

In that regard, even though Cisco is a bit late in bringing certain elements of its AI-focused infrastructure to market, the timing for its most likely audience could be just right. As Cisco's Jeetu Patel commented during the Day 2 keynote, "Data centers are cool again." This point was further reinforced by the recent TECHnalysis Research survey report, The Intelligent Path Forward: GenAI in the Enterprise, which found that 80% of companies engaged in GenAI work were interested in running some of those applications on-premises.

Ultimately, the projected market growth for on-site data centers presents intriguing new possibilities for Cisco and other traditional enterprise hardware suppliers.

Whether due to data gravity, privacy, governance, or other issues, it now seems clear that while the move to hybrid cloud took about a decade, the transition to hybrid AI models that leverage cloud and on-premises resources (not to mention on-device AI applications for PCs and smartphones) will be significantly faster. How the market responds to that rapid evolution will be very interesting to observe.

Bob O'Donnell is the president and chief analyst of TECHnalysis Research, LLC, a market research firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow Bob on Twitter @bobodtech

3 weeks ago