Serving tech enthusiasts for over 25 years.

TechSpot means tech analysis and advice you can trust.

What just happened? AMD's Instinct MI300 has quickly established itself as a major player in the AI accelerator market, driving significant revenue growth. While it can't hope to match Nvidia's dominant market position, AMD's progress indicates a promising future in the AI hardware sector.

AMD's recently launched Instinct MI300 GPU has quickly become a massive revenue driver for the company, rivaling its entire CPU business in sales. It is a significant milestone for AMD in the competitive AI hardware market, where it has traditionally lagged behind industry leader Nvidia.

During the company's latest earnings call, AMD CEO Lisa Su said that the data center GPU business, primarily driven by the Instinct MI300, has exceeded initial expectations. "We're really seeing now our [AI] GPU business really approaching the scale of our CPU business," she said.

This achievement is particularly noteworthy given that AMD's CPU business encompasses a wide range of products for servers, cloud computing, desktop PCs, and laptops.

The Instinct MI300, introduced in November 2023, represents AMD's first truly competitive GPU for AI inferencing and training workloads. Despite its relatively recent launch, the MI300 has quickly gained traction in the market.

Financial analysts estimate that AMD's AI GPU revenues for September alone were greater than $1.5 billion, with subsequent months likely showing even stronger performance.

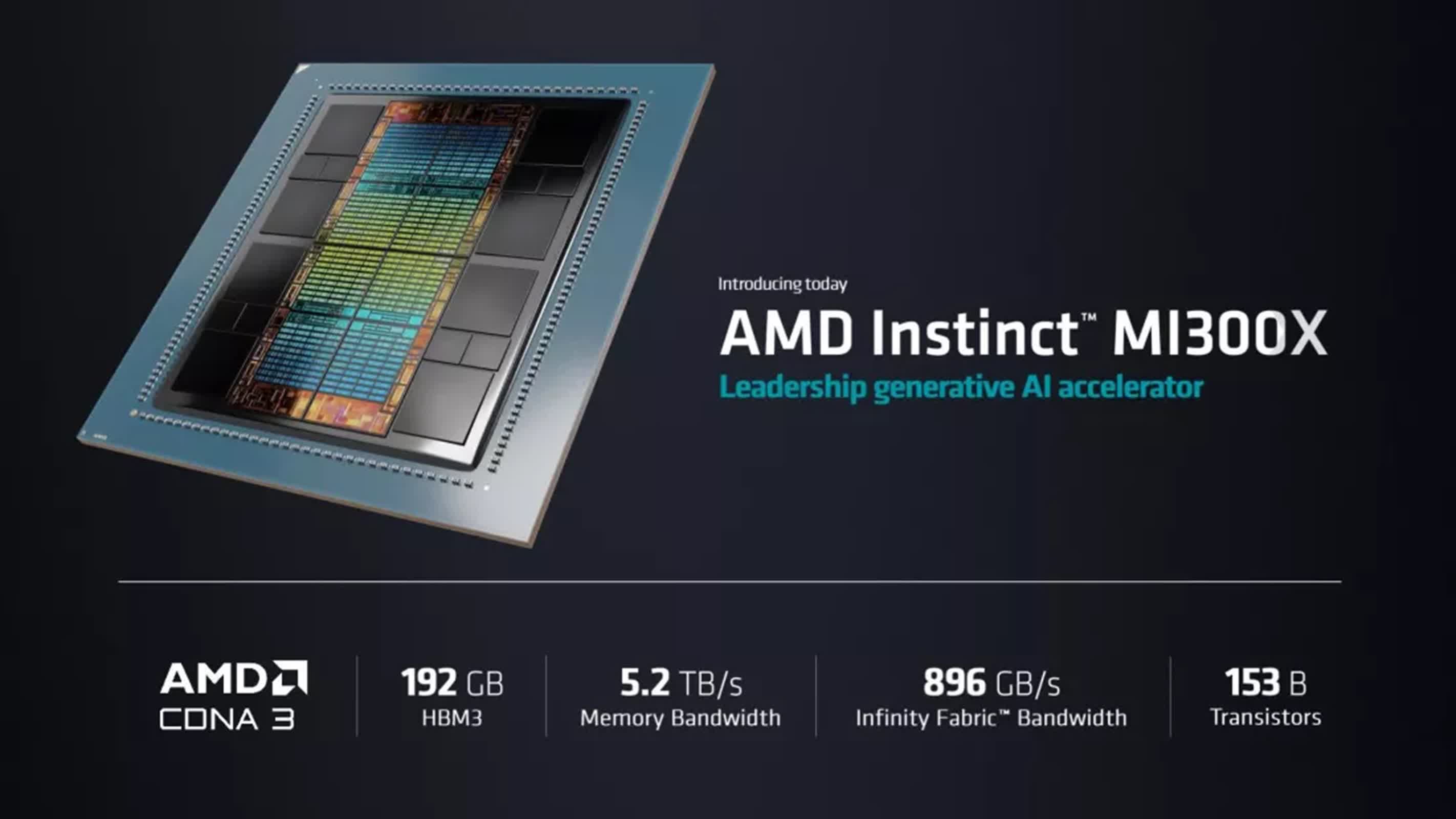

The MI300 specs are very competitive in the AI accelerator market, offering significant improvements in memory capacity and bandwidth.

The MI300X GPU boasts 304 GPU compute units and 192 GB of HBM3 memory, delivering a peak theoretical memory bandwidth of 5.3 TB/s. It achieves peak FP64/FP32 Matrix performance of 163.4 TFLOPS and peak FP8 performance reaching 2,614.9 TFLOPS.

The MI300A APU integrates 24 Zen 4 x86 CPU cores alongside 228 GPU compute units. It features 128 GB of Unified HBM3 Memory and matches the MI300X's peak theoretical memory bandwidth of 5.3 TB/s. The MI300A's peak FP64/FP32 Matrix performance stands at 122.6 TFLOPS.
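
As a rough sanity check on the quoted figures, the sketch below divides each part's peak FP64/FP32 Matrix throughput by its GPU compute unit count. The dictionary layout and the helper name `tflops_per_cu` are hypothetical, chosen only for illustration, and the comparison assumes peak throughput scales linearly with compute units, which the figures suggest but the article does not state.

```python
# Figures as quoted in the article: GPU compute units and
# peak FP64/FP32 Matrix performance in TFLOPS.
SPECS = {
    "MI300X": {"compute_units": 304, "matrix_tflops": 163.4},
    "MI300A": {"compute_units": 228, "matrix_tflops": 122.6},
}


def tflops_per_cu(name):
    """Peak matrix TFLOPS per GPU compute unit (hypothetical helper)."""
    spec = SPECS[name]
    return spec["matrix_tflops"] / spec["compute_units"]


if __name__ == "__main__":
    for name in SPECS:
        print(f"{name}: {tflops_per_cu(name):.4f} TFLOPS per compute unit")

    # Ratio of MI300A to MI300X compute units.
    cu_ratio = SPECS["MI300A"]["compute_units"] / SPECS["MI300X"]["compute_units"]
    print(f"MI300A has {cu_ratio:.0%} of the MI300X's GPU compute units")
```

Both parts work out to roughly 0.54 TFLOPS per compute unit, so the MI300A's lower peak figure is consistent with it simply carrying three quarters of the MI300X's GPU compute units.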

The success of the Instinct MI300 has also attracted major cloud providers, such as Microsoft. The Windows maker recently announced the general availability of its ND MI300X VM series, which features eight AMD MI300X Instinct accelerators. Earlier this year, Microsoft Cloud and AI Executive Vice President Scott Guthrie said that AMD's accelerators are currently the most cost-effective GPUs available based on their performance in Azure AI Service.

While AMD's growth in the AI GPU market is impressive, the company still trails behind Nvidia in overall market share. Analysts project that Nvidia could achieve AI GPU sales of $50 to $60 billion in 2025, while AMD might reach $10 billion at best.

However, AMD CFO Jean Hu noted that the company is working on over 100 customer engagements for the MI300 series, including major tech companies like Microsoft, Meta, and Oracle, as well as a broad set of enterprise customers.

The rapid success of the Instinct MI300 in the AI market raises questions about AMD's future focus on consumer graphics cards, as the significantly higher revenues from AI accelerators may influence the company's resource allocation. But AMD has confirmed its commitment to the consumer GPU market, with Su announcing that the next-generation RDNA 4 architecture, likely to debut in the Radeon RX 8800 XT, will arrive early in 2025.
