Back in March 2024, we reported how British AI startup Literal Labs was working to make GPU-based training obsolete with its Tsetlin Machine, a machine learning model that uses logic-based learning to classify data.

It operates through Tsetlin automata, which establish logical connections between features in input data and classification rules. Based on whether decisions are correct or incorrect, the machine adjusts these connections using rewards or penalties.
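The reward-and-penalty mechanism can be illustrated with a minimal Python sketch of a single two-action Tsetlin automaton. This is a textbook-style simplification, not Literal Labs' implementation: the class name `TsetlinAutomaton`, the helper `train`, and the state count `n` are hypothetical names chosen for illustration, and a full Tsetlin Machine combines many such automata using stochastic feedback rather than the deterministic loop shown here.

```python
class TsetlinAutomaton:
    """Two-action automaton with 2 * n states (illustrative only)."""

    def __init__(self, n=3):
        self.n = n
        # Start on the "exclude" side, right next to the decision boundary.
        self.state = n

    def action(self):
        # States 1..n choose action 0 (exclude a feature);
        # states n+1..2n choose action 1 (include it).
        return 1 if self.state > self.n else 0

    def reward(self):
        # Reinforce the current action by moving deeper into its half.
        if self.action() == 1:
            self.state = min(self.state + 1, 2 * self.n)
        else:
            self.state = max(self.state - 1, 1)

    def penalize(self):
        # Weaken the current action by moving toward the boundary,
        # crossing it if the automaton is already at the edge of its half.
        if self.action() == 1:
            self.state -= 1
        else:
            self.state += 1


def train(automaton, correct_action, steps):
    # Hypothetical training loop: reward correct decisions, penalize wrong ones.
    for _ in range(steps):
        if automaton.action() == correct_action:
            automaton.reward()
        else:
            automaton.penalize()
    return automaton


ta = train(TsetlinAutomaton(n=3), correct_action=1, steps=5)
print(ta.action(), ta.state)
```

Because each automaton is just a small integer counter, updating it takes only comparisons and increments, which is one reason this style of model can suit low-power hardware.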

Developed by Soviet mathematician Mikhail Tsetlin in the 1960s, this approach contrasts with neural networks by focusing on learning automata, rather than modeling biological neurons, to perform tasks like classification and pattern recognition.

Energy-efficient design

Now, Literal Labs, backed by Arm, has developed a model using Tsetlin Machines that, despite its compact size of just 7.29KB, delivers high accuracy and dramatically improves anomaly detection tasks for edge AI and IoT deployments.

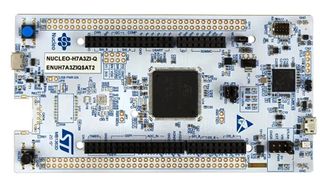

The model was benchmarked by Literal Labs using the MLPerf Inference: Tiny suite and tested on a $30 NUCLEO-H7A3ZI-Q development board, which features a 280MHz ARM Cortex-M7 processor and doesn’t include an AI accelerator. The results show Literal Labs’ model achieves inference speeds that are 54 times faster than traditional neural networks while consuming 52 times less energy.

Compared to the best-performing models in the industry, Literal Labs’ model demonstrates both latency improvements and an energy-efficient design, making it suitable for low-power devices like sensors. Its performance makes it viable for applications in industrial IoT, predictive maintenance, and health diagnostics, where detecting anomalies quickly and accurately is crucial.

The use of such a compact and low-energy model could help scale AI deployment across various sectors, reducing costs and increasing accessibility to AI technology.

Literal Labs says, “Smaller models are particularly advantageous in such deployments as they require less memory and processing power, allowing them to run on more affordable, lower-specification hardware. This not only reduces costs but also broadens the range of devices capable of supporting advanced AI functionality, making it feasible to deploy AI solutions at scale in resource-constrained settings.”

More from TechRadar Pro

- These are the best AI tools around today

- AI startup wants to make GPU training obsolete with extraordinary piece of tech

- Storage, not GPUs, is the biggest challenge to AI says influential report
